|

-

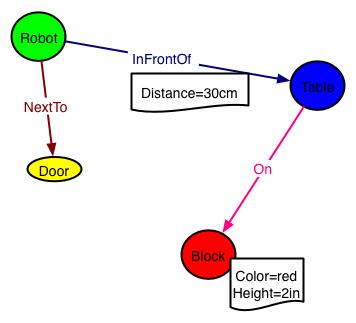

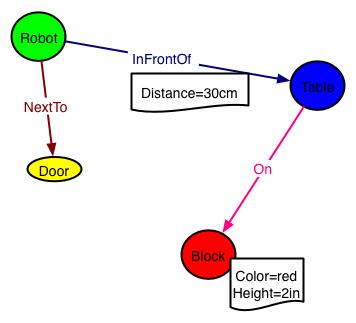

Building task-oriented relational representations for online

learning (McGovern, Lane, Fagg). Reinforcement learning provides a

suite of techniques designed for difficult control

problems yet the methods can be slow to converge and transferring

knowledge to larger problems is difficult. This research will

investigate the use of relational representations as one way to

overcome these issues. By representing the world as a set of objects

and relationships among these objects, agents can learn and

plan at a higher level. Students will develop relational

reinforcement learning methods for simulated and physical mobile

robotics domains. They will focus on developing techniques to

automatically create relational task-oriented knowledge

representations on a per task and per robot basis (Dabney and

McGovern, 2007; de Granville et al., 2006).

- Reinforcement Learning in Relational Environments (McGovern, Lane, Fagg).
Reinforcement learning has traditionally been plagued by slow convergence rates and an inability to scale to large problem domains. Recently, relational representations have been advocated as one possibility for overcoming these barriers. In this project, the students will investigate this cutting-edge research area by developing relational reinforcement learning methods for simulated mobile robotics domains. The students will begin by developing simulators for such domains that employ relational representations, e.g., systems that represent the concepts of "wall", "door", "obstacle", and "stair" directly rather than implicitly via a traditional tabular transition function. Such a representation can be dynamically interpreted at runtime to provide concrete transition probabilities from any specific atomic state and, thus, a simulation environment.

The students will also build learning agents situated in this simulation environment and will be introduced to rigorous empirical methodologies for evaluating the performance of learning agents. In this phase, they will begin by adapting traditional learning methods such as Q-learning or SARSA(lambda) to this environment by instantiating relations into atomic state descriptions and tabulating Q values for the realized states. Finally, the students will explore the use of state generalization methods such as relational function approximation.
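The two phases of this project, a relational simulator and a tabular learner over grounded states, can be illustrated with a minimal Python sketch. Everything specific here is an assumption made for illustration and does not come from the proposal: the `WORLD` layout, the `PASS_PROB` success probabilities, the reward values, and the function names `kind_at`, `transitions`, and `q_learning` are all hypothetical. The sketch shows only the first learning step described above, plain Q-learning, not SARSA(lambda) or relational function approximation.

```python
import random
from collections import defaultdict

# Hypothetical relational description of a small grid world. Cells that are
# not listed under "objects" are plain floor; everything outside is wall.
WORLD = {
    "width": 4,
    "height": 3,
    "objects": {(1, 1): "obstacle", (2, 0): "door"},
    "start": (0, 0),
    "goal": (3, 0),
}

# Assumed chance that a move into each kind of object succeeds.
PASS_PROB = {"floor": 1.0, "door": 0.8, "obstacle": 0.0, "wall": 0.0}

ACTIONS = ((1, 0), (-1, 0), (0, 1), (0, -1))  # unit steps along x and y


def kind_at(world, cell):
    """Interpret the relational description: which concept occupies `cell`?"""
    x, y = cell
    if not (0 <= x < world["width"] and 0 <= y < world["height"]):
        return "wall"
    return world["objects"].get(cell, "floor")


def transitions(world, state, action):
    """Derive P(next_state | state, action) at runtime for one atomic state."""
    target = (state[0] + action[0], state[1] + action[1])
    p = PASS_PROB[kind_at(world, target)]
    probs = {}
    if p > 0.0:
        probs[target] = p
    if p < 1.0:
        probs[state] = 1.0 - p  # a failed move leaves the agent in place
    return probs


def q_learning(world, episodes=500, alpha=0.5, gamma=0.95, epsilon=0.1, seed=0):
    """Tabular Q-learning over the grounded (atomic) states."""
    rng = random.Random(seed)
    q = defaultdict(float)  # (state, action) -> estimated return
    for _ in range(episodes):
        state = world["start"]
        for _ in range(100):  # step cap per episode
            if state == world["goal"]:
                break
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])  # exploit
            probs = transitions(world, state, action)
            nxt = rng.choices(list(probs), weights=list(probs.values()))[0]
            done = nxt == world["goal"]
            reward = 1.0 if done else -0.01
            best_next = 0.0 if done else max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (
                reward + gamma * best_next - q[(state, action)]
            )
            state = nxt
    return q
```

Calling `q_learning(WORLD)` returns a table keyed by `(state, action)` pairs. No transition table is stored anywhere: `transitions` consults the object description each time it is called, so changing `WORLD["objects"]` changes the simulated dynamics without touching the learner.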

- Visualization of Evolving Relational Models (McGovern, Lane, Fagg, Weaver).
A key advantage of using relational representations in reinforcement learning methods is that their evolving states can be readily depicted in diagram form, opening up possibilities for visually exploring and analyzing the structure and dynamics of the reinforcement learning process. Using existing graph visualization toolkits, students will develop and implement techniques and tools for interactive visualization of relational reinforcement learning data traces. Students will use these tools to evaluate and compare specific reinforcement learning approaches in terms of scalability, convergence, parsimony, sensibility, and variation as a function of both the general learning strategy and the particular computational parameter settings used across simulation runs.
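As a rough illustration of depicting a relational model in diagram form, the sketch below turns one snapshot of a model into Graphviz DOT text and highlights relations that are new since the previous snapshot. The proposal does not name a toolkit, so the choice of DOT is an assumption, as are the `(subject, relation, object)` fact encoding, the function name `facts_to_dot`, and the example facts.

```python
def facts_to_dot(facts, previous=frozenset(), name="model"):
    """Render (subject, relation, object) facts as a Graphviz DOT digraph.

    Facts absent from `previous` are drawn in red so that changes between
    two snapshots of a learning trace stand out.
    """
    lines = [f"digraph {name} {{"]
    for subj, rel, obj in sorted(facts):
        attrs = f'label="{rel}"'
        if (subj, rel, obj) not in previous:
            attrs += ', color="red"'  # newly added relation
        lines.append(f'  "{subj}" -> "{obj}" [{attrs}];')
    lines.append("}")
    return "\n".join(lines)


# Two hypothetical snapshots of an evolving relational model.
snapshot_0 = {("robot", "in", "room1")}
snapshot_1 = snapshot_0 | {("room1", "connected_by", "door1")}

dot_text = facts_to_dot(snapshot_1, previous=snapshot_0)
```

The resulting `dot_text` can be written to a file and rendered with any DOT-compatible viewer. Emitting one graph per snapshot is one simple way to step through a data trace.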

|